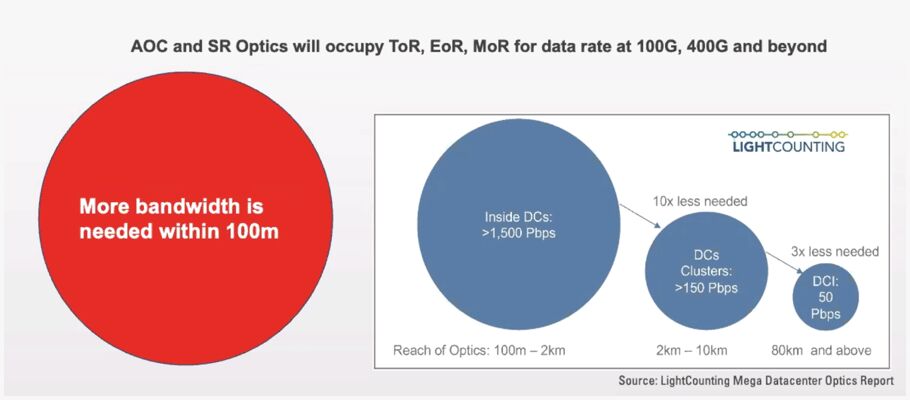

The challenge in planning for exponential growth is the increasing frequency and disruption of change. Even as hyperscale and multi-tenant data center managers begin migrating to 400G and 800G, the bar has already been raised to 1.6T. The current explosion of cloud services, distributed cloud architectures, artificial intelligence, video, and mobile-application workloads will quickly outstrip the capabilities of 400G/800G networks now being deployed. The problem isn’t just bandwidth capacity; it’s also operating efficiency.

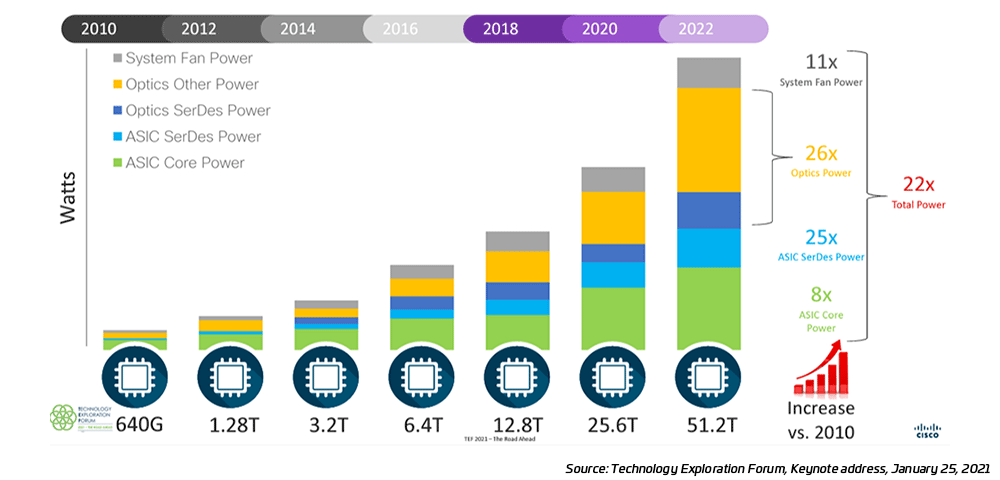

Driven by increased power needs, networking accounts for a growing portion of overall delivery costs inside the data center. Today’s network switches cannot meet the power requirements of tomorrow’s higher-capacity networks. Next-generation network components therefore aim to reduce their per-bit power consumption, which is projected to eventually reach 5 pJ/bit.
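To make the per-bit figure concrete, here is a minimal sketch of the arithmetic: power equals bit rate multiplied by energy per bit. The 5 pJ/bit value comes from the text above; the 15 pJ/bit comparison point and the function name `link_power_watts` are assumptions chosen only for illustration.

```python
def link_power_watts(rate_bps: float, energy_pj_per_bit: float) -> float:
    """Power (W) = bit rate (bit/s) x energy per bit (J/bit)."""
    return rate_bps * energy_pj_per_bit * 1e-12  # convert pJ to J


RATE_1_6T = 1.6e12  # 1.6 Tb/s expressed in bit/s

# 5 pJ/bit is the projection cited in the text.
# 15 pJ/bit is a hypothetical comparison point, not a measured value.
for pj_per_bit in (15.0, 5.0):
    watts = link_power_watts(RATE_1_6T, pj_per_bit)
    print(f"{pj_per_bit:4.1f} pJ/bit at 1.6 Tb/s -> {watts:.1f} W")
```

At 5 pJ/bit, a 1.6 Tb/s link would draw roughly 8 W under this simple model, which ignores cooling and any other overhead.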

This is where the move to 1.6T is such an important step. Among other things, 1.6T offers more energy-efficient networking, helping operators meet the demand for more application capacity at reduced costs. So, how do we get there? Here are some thoughts.

Start with the switch

Network switches, the most numerous powered devices in the network, are among its biggest power consumers, and the electrical signalling between the ASIC and the optical transmitter/receiver accounts for the largest share. As switch speeds increase, electrical signalling efficiency decreases, which currently limits electrical lane speeds to 100G. With serial I/Os capable of supporting 200G lanes at least three years away, some network managers are deploying more high-density (high-radix) switches.

Others, however, argue for more point solutions, such as flyover cables in place of printed wiring boards (PWBs), to address the electrical signalling challenge and enable future pluggable optics. Other solutions involve using OSFP-XD to double lane counts and increasing the signalling speed to 200G. There are also those who argue that a platform approach is needed to support long-term growth.

The role of co-packaged and near-packaged optics (CPO/NPO)

A more systematic approach to radically increasing density and reducing power per bit is co-packaged optics (CPO). CPO advocates contend that sufficiently reducing power per bit for 1.6T and 3.2T switches requires new architectures built around CPO. CPO limits electrical signalling to very short reaches and eliminates re-timers while optimizing FEC schemes. Bringing the technology to market at scale, however, requires significant re-tooling across the network ecosystem. New standards would greatly help this industry transformation.

The bottom line with CPO is that it will take time to mature. The near-packaged optics (NPO) model may offer an interim step that could be easier and quicker to bring to market—assuming the industry’s supply chain can adapt. Many argue that pluggable modules continue to make sense through 1.6T.

200G electrical signalling and the need for more fiber

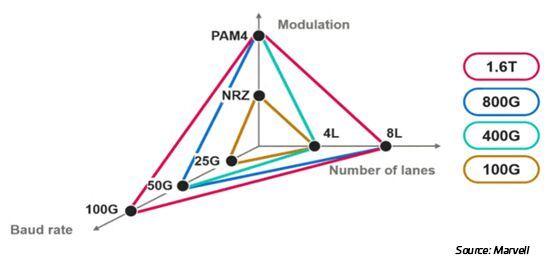

Getting to the next switching node (a doubling of capacity) can be done with more I/O ports or higher signalling speeds. Each option has its benefits, depending on how the bandwidth will be used. Having more I/Os increases the number of devices a switch supports, whereas higher aggregate bandwidth combinations can support longer-reach applications using fewer fibers.
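The two routes to a doubling can be sketched with simple arithmetic: switch capacity is the number of electrical lanes multiplied by the rate per lane. The baseline of 512 lanes at 100G below is a hypothetical starting point chosen for illustration, not a figure from this article.

```python
def switch_capacity_tbps(lanes: int, lane_rate_gbps: int) -> float:
    """Aggregate switch capacity in Tb/s = lanes x per-lane rate (Gb/s) / 1000."""
    return lanes * lane_rate_gbps / 1000


# Hypothetical baseline: 512 lanes at 100G each.
BASE_LANES, BASE_RATE_GBPS = 512, 100

options = {
    "baseline": (BASE_LANES, BASE_RATE_GBPS),
    "more I/O (2x lanes)": (2 * BASE_LANES, BASE_RATE_GBPS),
    "faster lanes (2x rate)": (BASE_LANES, 2 * BASE_RATE_GBPS),
}

for name, (lanes, rate) in options.items():
    capacity = switch_capacity_tbps(lanes, rate)
    print(f"{name:24s} {lanes:5d} lanes x {rate}G = {capacity:.1f} Tb/s")
```

Both doubling options reach the same aggregate capacity. The difference is that the first exposes twice as many lanes to attach devices to, while the second carries the same bandwidth over half as many lanes.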

Recently, the 4x400G MSA suggested a 1.6T module with options of 16 x 100G or 8 x 200G electrical lanes and a variety of optical options mapped through the 16-lane OSFP-XD form factor. A high-radix application would require 32¹ duplex connections at 100G (perhaps SR32/DR32), while longer-reach options would meet up with previous generations at 200G/400G.
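As a rough illustration of how the two electrical options differ, the sketch below checks that both add up to 1.6T and counts how many equal-speed ports each could be split into. It assumes, purely for illustration, that a breakout port must use a whole number of lanes with no gearboxing between lane rates; the function name `breakout_ports` is hypothetical.

```python
def breakout_ports(lanes: int, lane_rate_gbps: int, port_rate_gbps: int) -> int:
    """Number of equal-speed ports a module can be split into.

    Returns 0 when the port rate is not a whole multiple of the lane rate,
    or when the lanes do not divide evenly among ports (illustrative rule).
    """
    if port_rate_gbps % lane_rate_gbps != 0:
        return 0
    lanes_per_port = port_rate_gbps // lane_rate_gbps
    if lanes % lanes_per_port != 0:
        return 0
    return lanes // lanes_per_port


# The two electrical options named in the text: (lanes, lane rate in Gb/s).
module_options = {"16 x 100G": (16, 100), "8 x 200G": (8, 200)}

for name, (lanes, rate) in module_options.items():
    total_gbps = lanes * rate
    counts = {p: breakout_ports(lanes, rate, p) for p in (100, 200, 400, 800)}
    print(f"{name}: total {total_gbps}G, breakout ports by speed: {counts}")
```

Under this simplified rule, only the 16 x 100G option can fan out to sixteen 100G ports, which is consistent with its role in high-radix designs, while both options can be grouped into 200G or 400G ports.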

Potential paths to 200G lanes

While suppliers have demonstrated the feasibility of 200G lanes, customers have concerns regarding the ability to manufacture enough 200G optics to bring the cost down. Reproducing 100G reliability and the length of time needed to qualify the chips are also potential issues.²

Whichever route migration to 1.6T takes, it will inevitably involve more fiber. MPO16 will likely play a key role as it offers wider lanes with very low loss and high reliability. It also offers the capacity and flexibility to support higher-radix applications. Meanwhile, as links inside the data center grow shorter, the equation tips toward multimode fiber, with its lower-cost optics, lower latency, reduced power consumption, and better power-per-bit performance.

So, what about the long-anticipated predictions of copper’s demise? At these higher speeds, look for copper I/Os to be very limited, as achieving a reasonable balance of power/bit and distance isn’t likely. This is true even for short-reach applications that will eventually be dominated by optical systems.

What we do know

All this to say that much of the necessary journey to 1.6T is still up in the air. Still, aspects of the eventual migration to 1.6T are coming into focus.

1 The OSFP-XD MSA included options for two MPO16 connectors supporting a total of 32 SMF or MMF connections.

2 The right path to 1.6T PAM4 optics: 8x200G or 16x100G; LightCounting; December 2021
